Artificial Intelligence standard to help industries mitigate bias in AI systems

The uptake of artificial intelligence (AI) technologies has been swift, but with it has come the risk of embedding unwanted bias in these systems.

Biases, whether social, data or cognitive, originate in the base data and algorithms at the core of AI and can result in mistrust of these emerging systems.*

However, Standards Australia experts are working hard to reduce the potential for unwanted bias. A key example is the adoption, by the IT-043 committee, Artificial Intelligence, of SA TR ISO/IEC 24027:2022, Information technology — Artificial intelligence (AI) — Bias in AI systems and AI aided decision making. This standard provides requirements to help ensure AI technologies and systems meet critical objectives for functionality, interoperability and trustworthiness.

SA TR ISO/IEC 24027:2022 is an identical adoption of ISO/IEC TR 24027:2021. It also specifies measurement techniques and methods for assessing bias, with the aim of addressing bias-related vulnerabilities.

According to Aurelie Jacquet, Chair of the IT-043 committee leading the adoption, the standard provides guidance for organisations to identify and manage unwanted bias in AI systems.

“As fairness is one of Australia’s eight AI ethics principles, there have been many debates around what bias and fairness mean and how to implement this principle in practice.”

“SA TR ISO/IEC 24027:2022 is invaluable because it provides guidance on how to assess bias and fairness through metrics and on how to address and manage unwanted bias. It can effectively help organisations operationalise our AI fairness principle,” Ms Jacquet says.

“This standard helps provide best practice that is recognised internationally and that has always been helpful when regulation is approaching closer and closer to really understand what's expected and what best practice looks like.”

David Wotton (TGA representative), the IT-043 Working Group 3 - Trustworthiness Convenor, said that "SA TR ISO/IEC 24027:2022 would help with identifying and addressing different forms of bias".

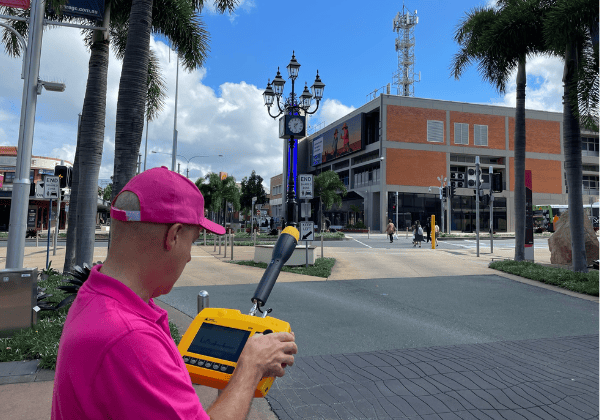

AI has many valuable applications: it can drive robots used in disaster recovery, help medical devices better detect diseases such as cancer, and help solve complex problems such as fraud detection, weather forecasting, climate change and spam detection.

Standards Australia’s Artificial Intelligence Roadmap provides recommendations that outline the interests of Australian stakeholders in the use of AI.

The organisation is also continuing to work with stakeholders in industry and government to ensure that Australia’s voice is heard internationally and influences international AI standards development.

*(Bias in artificial intelligence systems can manifest in different ways. While some bias is necessary to address AI system objectives, other bias is not intended in the objectives and thus represents unwanted bias in the AI system.)
